I’m just gonna say it: I’m getting old. I turn 50 this year. I arrived in the Valley in 1997. I’ve been doing networking for 25 years which shocks me to say. And I think I’ve done a pretty good job of keeping myself technically relevant. I study, I got my CWNE a few years ago…I mean I’m getting old but I’m not a fuddy duddy but…sometimes I wonder if I’m just ranting at kids to get off my lawn.

Last week at Mobility Field Day 9 we had not one but two vendors present “Cloud NAC”, or basically “RADIUS in the cloud”. Clearly this seems to be a “thing” now that we have to think about. And I have to wonder: Why? What’s the driver here? My gut tells me this is not a good idea. Is it just because of the aforementioned being old thing?

When it comes to RADIUS servers there are two big “products” – Cisco ISE and Aruba ClearPass. (Yes, there are others, such as Forescout’s platform and even FreeRADIUS, but that’s not the point.) I’ve played with both of them. I currently use ISE. (As I like to say – it’s not the worst piece of Cisco software I’ve used…but that list includes CMX and MSE so that’s a low bar.) And the more I work with it, the more I realize…ISE is its whole own thing. It’s a complex beast. I like to think I’m not stupid but I absolutely need adult supervision when playing with it. (Hi Jared!) ISE is not easy, nor is ClearPass, and I do think that part of the appeal of these cloud NAC platforms is that they try to take something that has traditionally been viewed as very complex and simplify it.

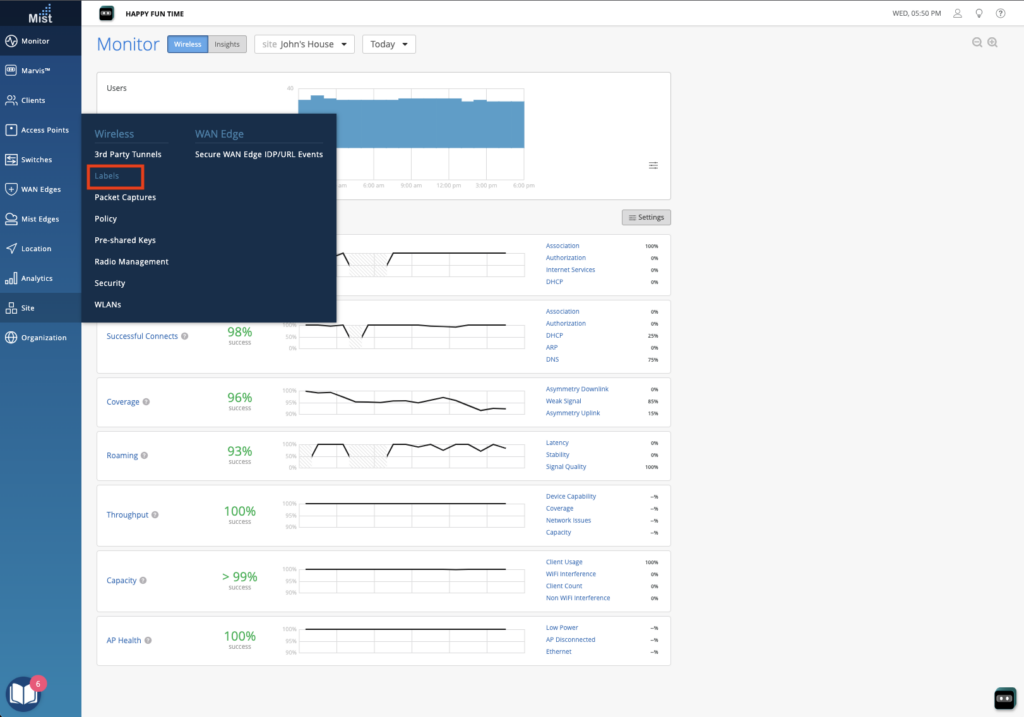

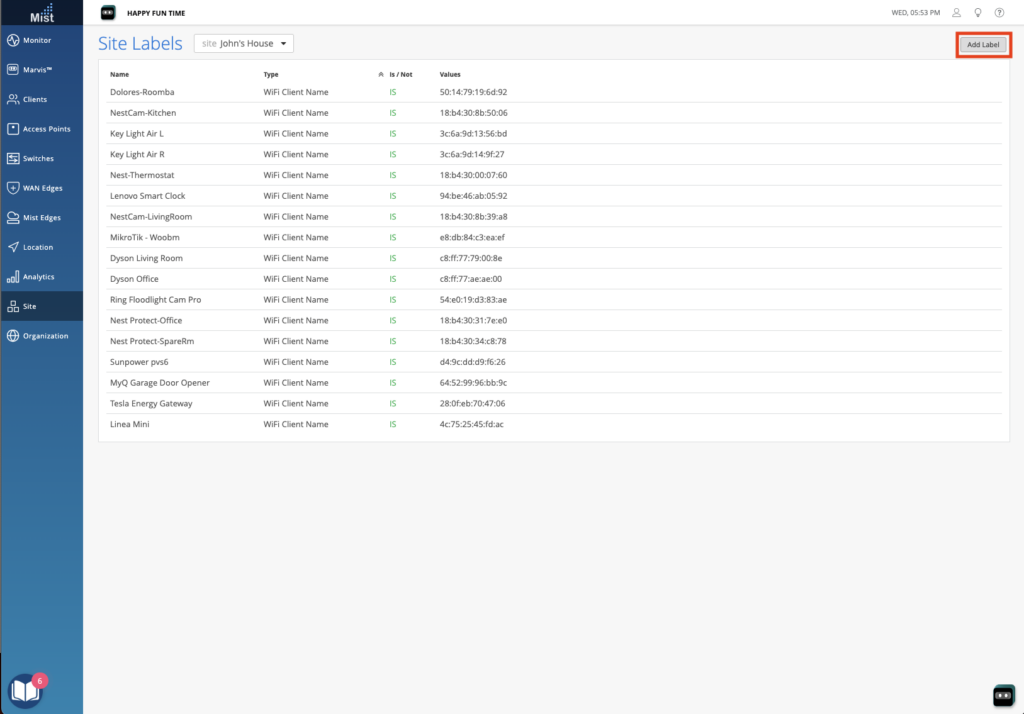

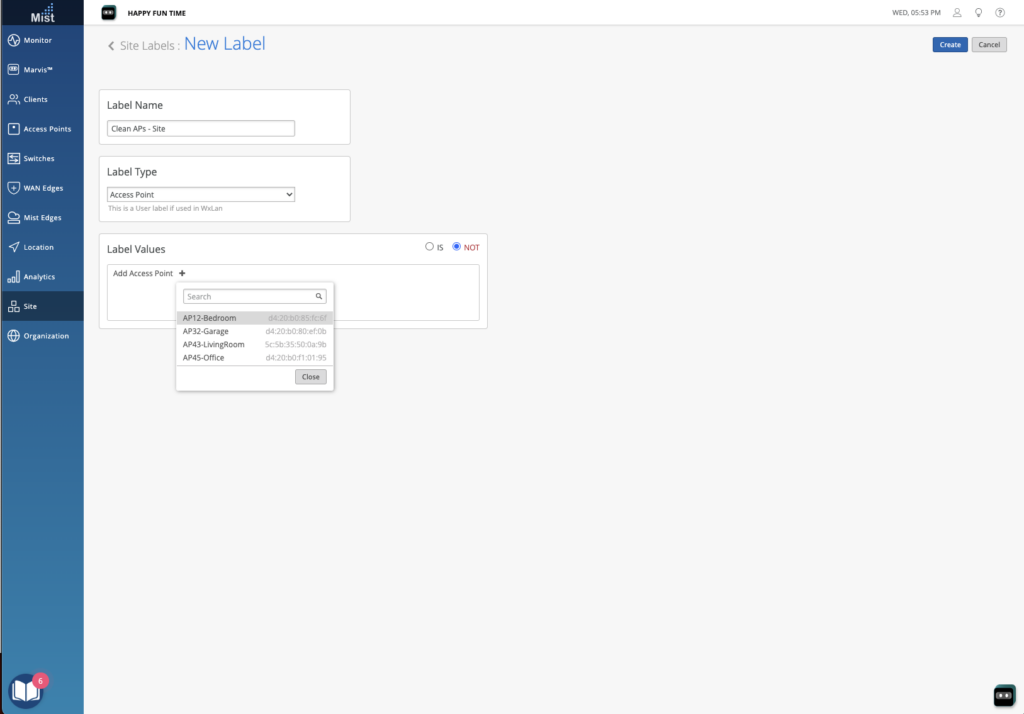

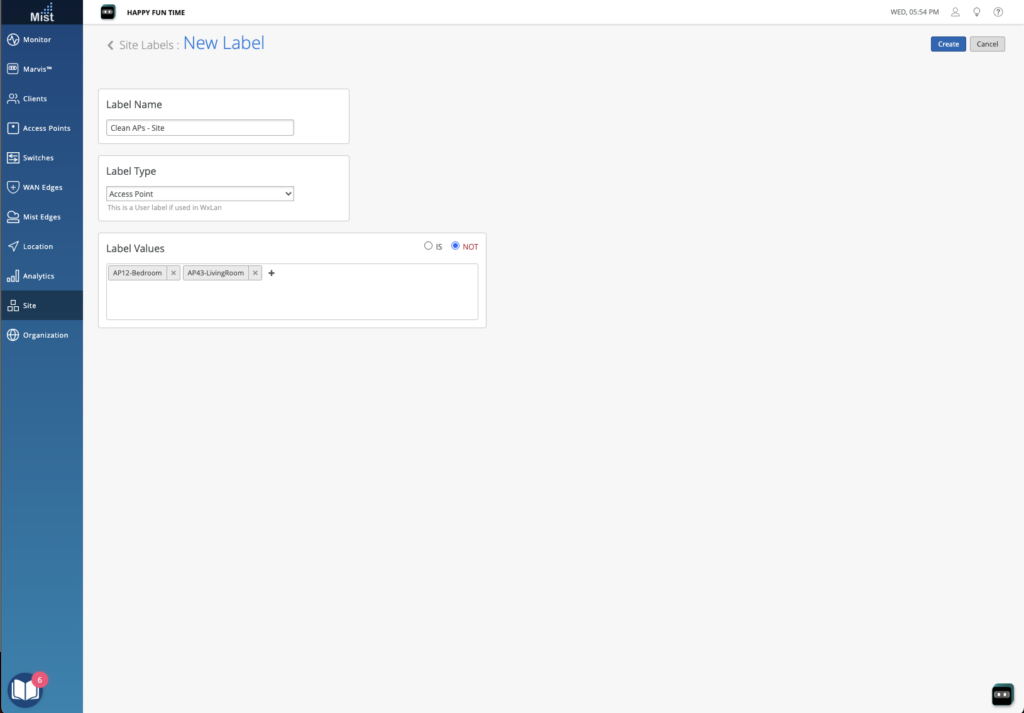

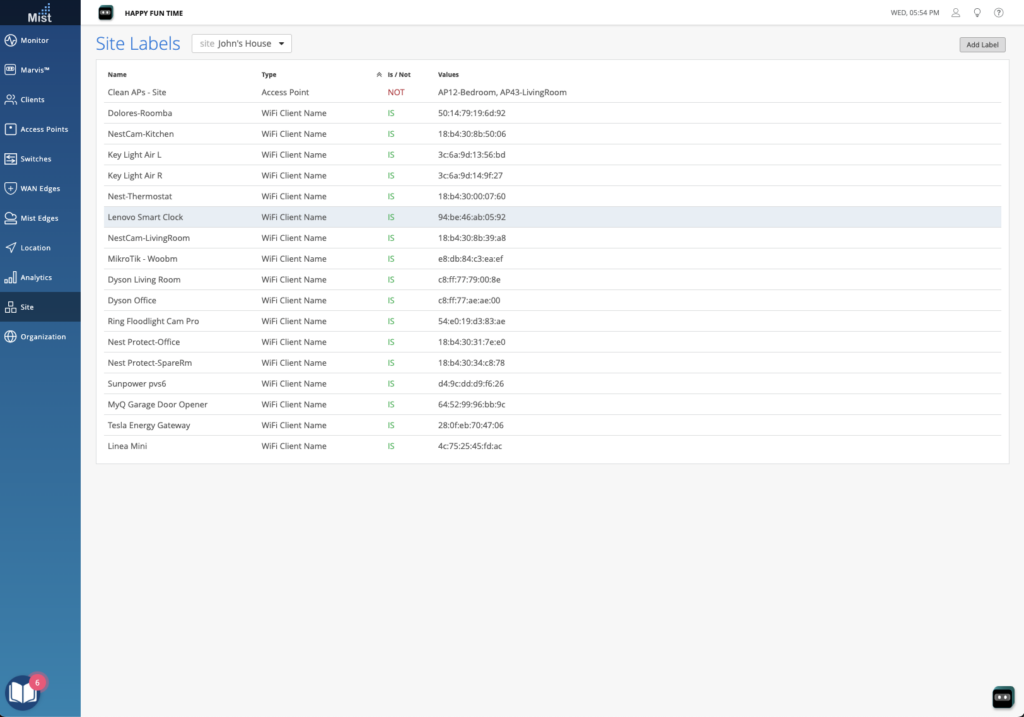

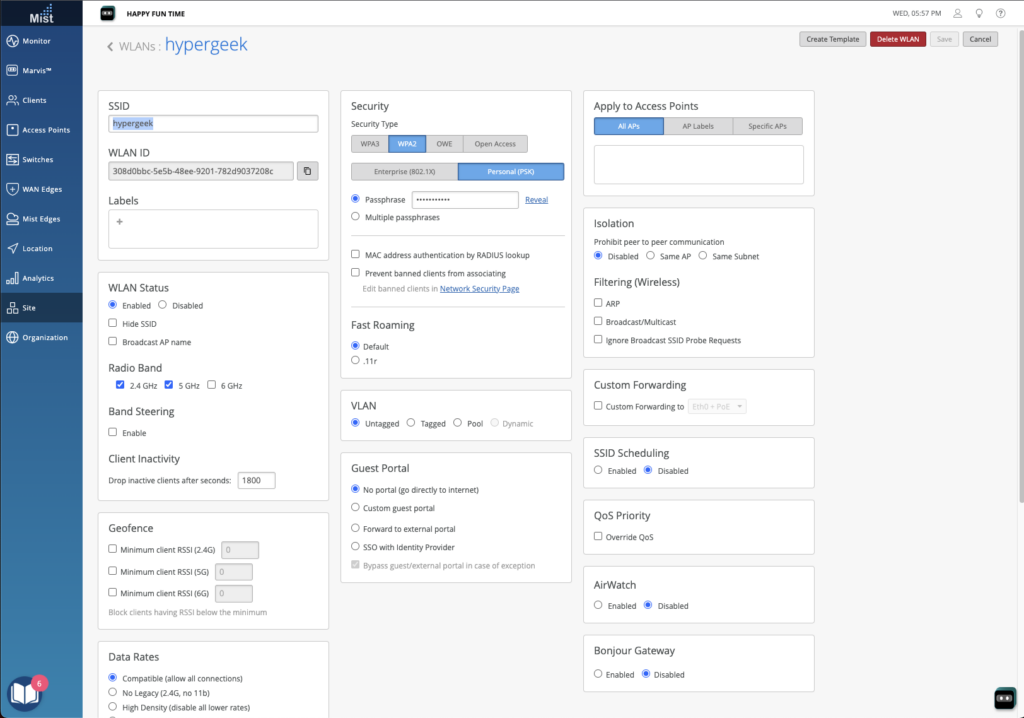

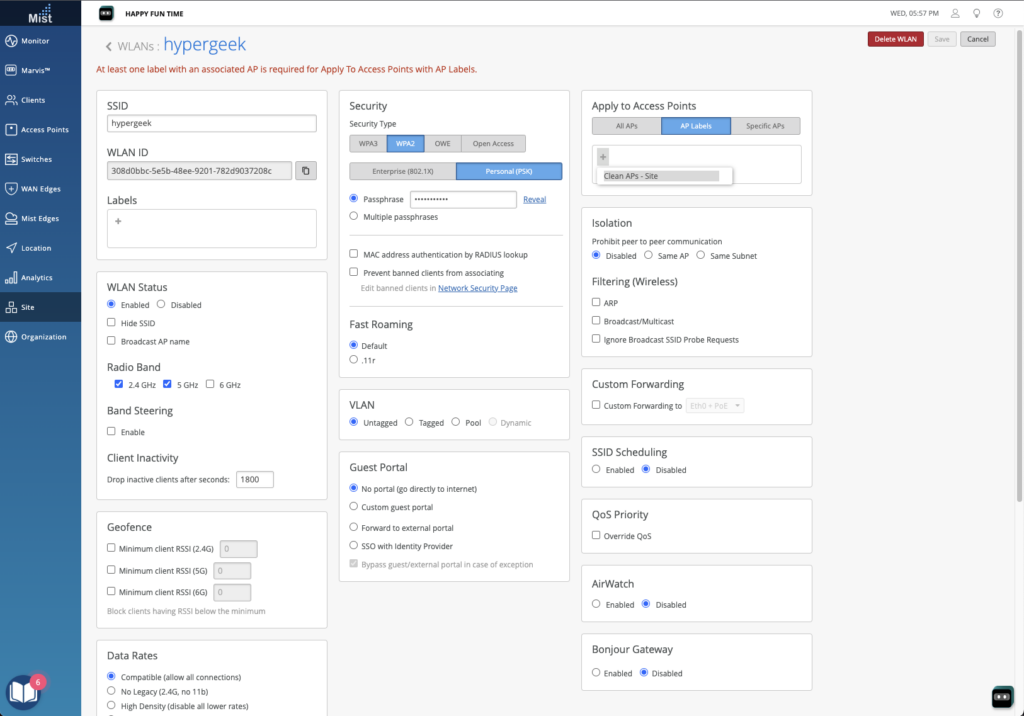

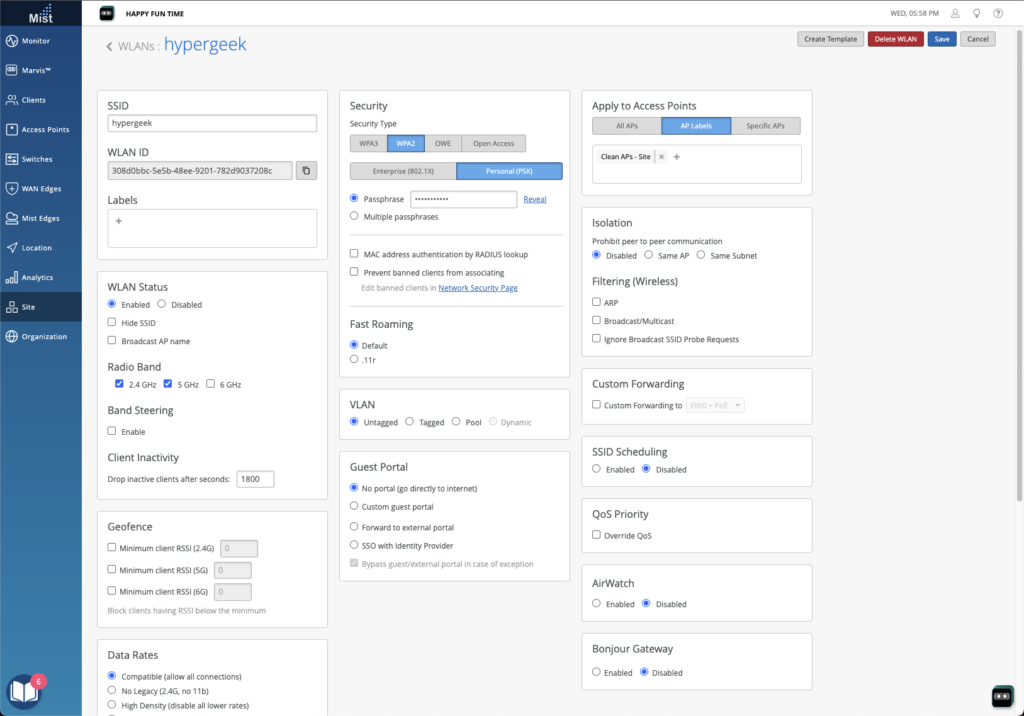

The first platform we were shown was Juniper’s Mist Access Assurance. This is clearly the result of their acquisition of WiteSand. My first impression from the limited demo that we saw was that the UI will be quite familiar to anyone who has worked with Juniper’s policy engine recently, such as with their SSR config. It felt very similar. It seems to be mostly focused on folks currently doing EAP-TLS client authentication – the marketing page says “Access Assurance provides identity fingerprinting based on X.509 certificate attributes.” However, one area where features need to be added is endpoint profiling. The ability to talk to platforms like Intune and Jamf is required if posture enforcement (things like OS patch level, antivirus status, etc.) is part of your network access policy.

Arista then showed up with CloudVision AGNI. I’m going to continue to insist that AGNI is a backronym. They claim it stands for Arista Guardian for Network Identity, but no sane PM would pick that name. (It’s still clever so I’m gonna let it slide.) In terms of functionality and UI it did seem a bit more traditional than the Juniper solution. I’m not going to call that necessarily a bonus – doing things the old way isn’t always the best way. It just seemed more familiar to an old man like me. Of interest was that they had an “app store”-like approach to external integrations. Intune and Jamf were both demonstrated as “apps”.

Both platforms rely on RadSec for communication between Authenticators and Authentication Servers. They both also have a way to support devices that don’t do RadSec natively – for Juniper you can use a Mist Edge as a proxy and for Arista you can use one of their switches. (I’m not sure what percentage of potential Mist Access Assurance customers have Mist Edges as part of their deployment, but I’m sure that the vast majority of potential Arista AGNI customers have their switches.) This may be a minor concern – most of the devices out there can do RadSec. But you may run across some old 2924 or who knows what that needs this feature. (Kids, ask your parents.)

Neither platform seems to support TACACS. Like it or not, some of us still use TACACS for management plane authentication on large parts of our infrastructure. If I were asked to move completely off of ISE to one of these platforms, that would be a problem to solve. Yes, we can use RADIUS. It’s just something to note. Maybe my familiarity with TACACS is just another sign of my age.

Clearly, and I’m sure this will shock no one, each platform is primarily geared to provide the best experience to its own customers. Do you have Mist Wi-Fi and switching and SD-WAN? Cool. There are a lot of interesting things you’ll be able to do. And if you have Arista switching and Wi-Fi then AGNI will be a great fit for you. How well will they integrate for customers who have multi-vendor environments? That remains to be seen.

So…why does “Cloud NAC” make me nervous?

Some of it is, I’m sure, the issue with learning a new platform. I know *what* I want my NAC platform to do but 9 times out of 10 the struggle is “How do I get the platform to do the thing I want?” (True story – just today while writing this I figured out that the problem with my policy was that I had included a period in a Device Group name in ISE, and while ISE will let you do that, it will also break when you try to reference that Device Group…so yay?)

Juniper here has an edge – their goal is simplification. They want it to be easy. You probably don’t need all those nerd knobs anyway, and when you do they may be an API call away but they’re still there. I’m sure that most AAA customers only use a fraction of the platform’s features and Juniper will make sure they cover the common use cases quite well. Arista also wants it to be easy. But the question is: will they make it easy for people who need to do complex things?

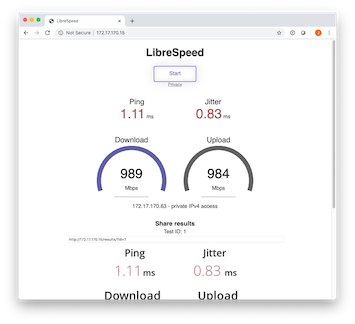

But even setting aside the inertia of having to learn a new thing, there’s something about putting such a low-level resource farther away from the user that just doesn’t sit well. When I compare my enterprise WAN to “the Internet” I know that my enterprise WAN is built around service guarantees, SLAs, and being able to manage and measure performance. Once your packet is out there on “the Internet” there are no such guarantees. Will it usually work? Sure. What can I do if it’s intermittently failing? Not much. If the peering between your ISP and the cloud vendor that your NAC is running on is congested, what happens? I can’t measure that so I can’t manage it. And what impact will variable latency (called jitter by some, or packet delay variation by the pedantic such as myself) have on the user experience? I guess we’re going to find out, especially in 802.1X secured networks that don’t have Fast Transition enabled.
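To put rough numbers on that worry, here is a minimal Python sketch, not a measurement of any real platform. It treats packet delay variation as the difference between consecutive delay samples, and it estimates how long a full 802.1X authentication might take if every EAP exchange has to cross the path to the server. The sample values and the round-trip count (`ASSUMED_ROUND_TRIPS`) are made up for illustration; the real count depends on the EAP method and certificate sizes.

```python
def delay_variation(delays_ms):
    """Absolute difference between each pair of consecutive delay samples (ms)."""
    return [abs(b - a) for a, b in zip(delays_ms, delays_ms[1:])]


def estimated_auth_time_ms(rtt_ms, round_trips):
    """Rough time for an exchange needing `round_trips` sequential trips to the server."""
    return rtt_ms * round_trips


# Hypothetical round-trip samples in milliseconds, invented for this example.
on_prem_rtt = [2.0, 2.1, 1.9, 2.0, 2.2]
cloud_rtt = [38.0, 41.0, 95.0, 40.0, 39.0]

# Assumption: a full authentication needs about 10 sequential round trips.
ASSUMED_ROUND_TRIPS = 10

for label, samples in (("on-prem", on_prem_rtt), ("cloud", cloud_rtt)):
    worst_pdv = max(delay_variation(samples))
    worst_auth = estimated_auth_time_ms(max(samples), ASSUMED_ROUND_TRIPS)
    print(f"{label}: worst delay variation {worst_pdv:.1f} ms, "
          f"worst-case auth estimate {worst_auth:.0f} ms")
```

The point of the sketch is only that the per-packet delay gets multiplied by every round trip in the exchange, so a single slow sample on the path to the cloud matters far more on a full authentication than it would on a single request.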

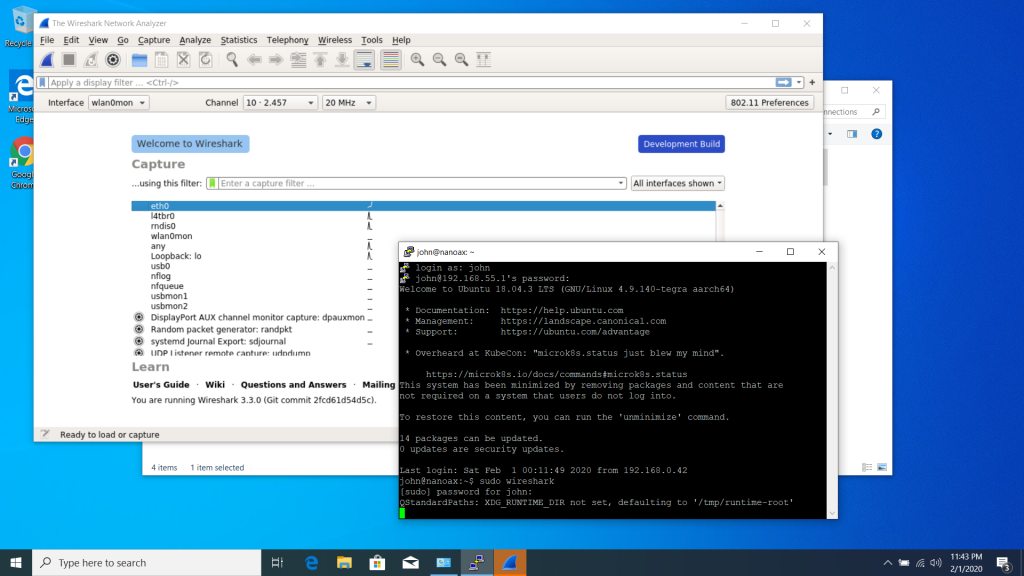

Today I can measure one-way latency. I can do packet captures on both my AP and my ISE PSN to look for packet loss. There are all sorts of fun troubleshooting tricks – things I’ve actually had to do at times – that go out the window when your NAC servers are “on the Internet”. To be fair, most of these tricks have been necessary due to misbehaving clients and not actual AAA problems, but when AAA doesn’t work it’s a “network problem”, even when it’s a client problem. I generally like to have as much control over the dependencies of my platforms as possible, because when they fail I’m the one dealing with it.
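As a rough illustration of the two-capture trick, here is a minimal Python sketch. It assumes you have already extracted, from each capture, a timestamp for every RADIUS request keyed by something that identifies the packet on both sides. The key used here, (source port, RADIUS identifier), is a simplification since identifiers wrap, and the one-way latency only means anything if both capture points have synchronized clocks. The function name and sample data are hypothetical.

```python
def compare_captures(ap_seen, server_seen):
    """Compare two captures of the same RADIUS requests.

    Both arguments map a packet key to a capture timestamp in seconds.
    Returns the keys seen at the AP but never at the server, and the
    one-way latency in milliseconds for every key seen at both points.
    """
    lost = [key for key in ap_seen if key not in server_seen]
    one_way_ms = {
        key: (server_seen[key] - ap_seen[key]) * 1000
        for key in ap_seen
        if key in server_seen
    }
    return lost, one_way_ms


# Hypothetical data: (source port, RADIUS identifier) -> timestamp in seconds.
ap_capture = {(50123, 1): 100.000, (50123, 2): 100.250, (50123, 3): 100.500}
psn_capture = {(50123, 1): 100.002, (50123, 3): 100.503}

lost, latency = compare_captures(ap_capture, psn_capture)
print("lost between AP and PSN:", lost)
for key, ms in latency.items():
    print(key, f"{ms:.1f} ms one-way")
```

This only works because I own both ends of the path. With the server side living in someone else’s cloud, the second capture is the part I no longer get.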

Just to prove that it’s not all fear, uncertainty, and doubt, I will say that I can see a lot of reasons why this could be a good idea. What’s the worst part of a NAC deployment? Sizing. How many endpoints do I have? How many licenses do I need? How many servers? Appliances or VMs? Yeah, I wouldn’t miss having to deal with all of that. Especially the licensing discussion.

At the end of the day I’m going to take a “wait and see” approach. I’ll look for a few brave reference customers who face similar challenges to mine and see what their experience is like. I’ll play with things at home and test. Because no matter how old I am I know that fighting the future is pointless. Now the question becomes – is this the future? I think we’ll find out shortly.